|

SLIDE 22: TITLE PAGE OF HUME - "A TREATISE OF HUMAN NATURE"

Hume argued convincingly

that the WHY is not merely

second to the HOW, but that the WHY is totally superfluous as

it is subsumed by the HOW.

|

|

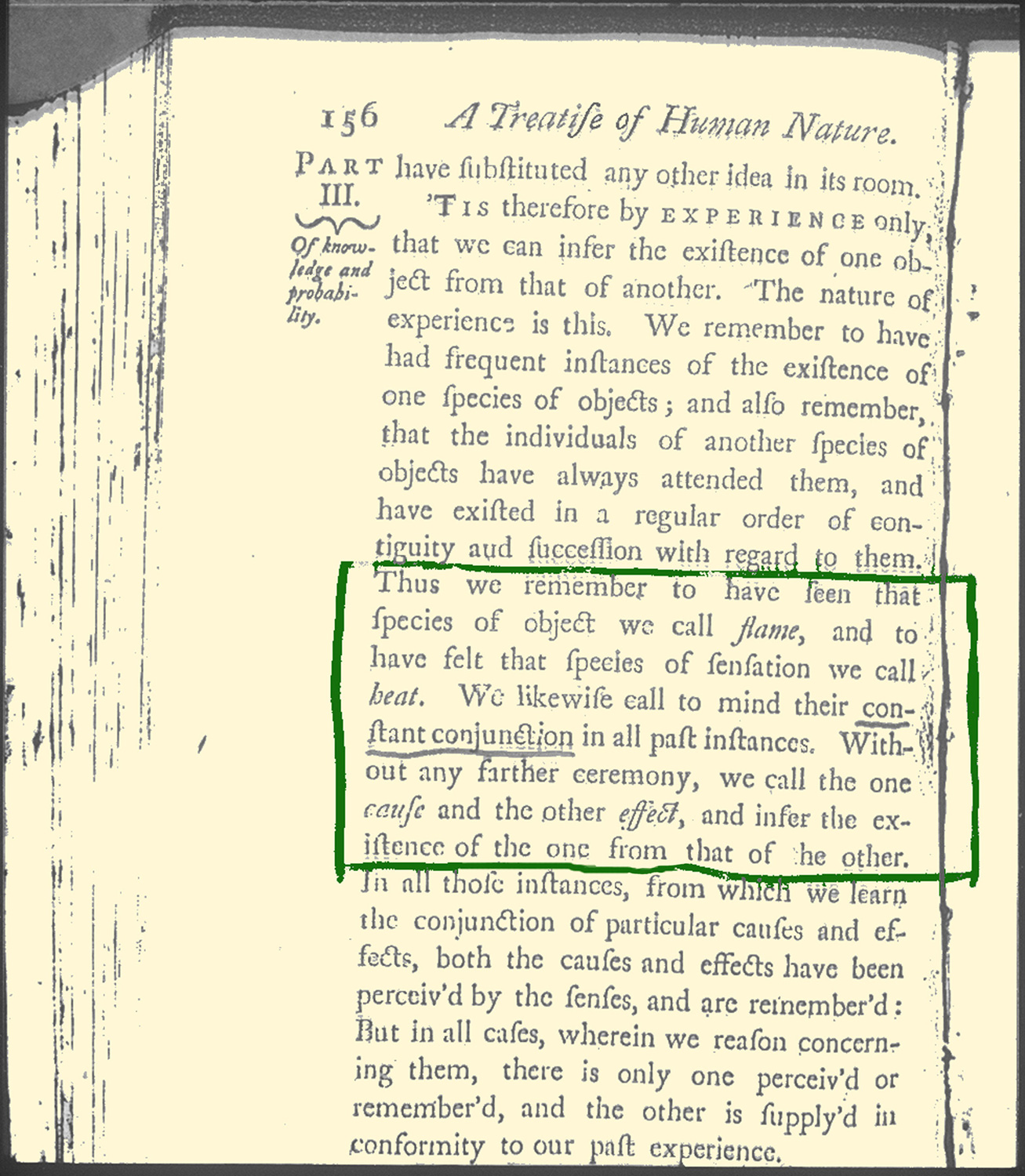

SLIDE 23: PAGE 156 FROM "A TREATISE OF HUMAN NATURE"

On page 156 of Hume's "Treatise of Human Nature", we find the

paragraph that shook up causation so thoroughly that it

has not recovered to this day.

I always get a kick reading it:

"Thus we remember to have seen that species

of object we call *FLAME*, and to have felt

that species of sensation we call *HEAT*. We

likewise call to mind their constant conjunction in

all past instances. Without any farther ceremony,

we call the one *CAUSE* and the other *EFFECT*,

and infer the existence of the one from that of the

other."

Thus, according to Hume, causal connections are

the product of observations. Causation is a learnable

habit of the mind,

almost as fictional as optical illusions and as

transitory as Pavlov's conditioning.

It is hard to believe that Hume was not aware of the

difficulties inherent in his proposed recipe.

He knew quite well

that the rooster crow STANDS in constant conjunction to the

sunrise, yet it does not CAUSE the sun to rise.

He knew that the barometer reading STANDS in constant

conjunction to the rain, but does not CAUSE

the rain.

|

Today these difficulties fall under the rubric of

SPURIOUS CORRELATIONS, namely "correlations that do not imply causation".

Now, taking Hume's dictum that

all knowledge comes from experience, that experience

is encoded in the mind as correlation, and

our observation that correlation does

not imply causation, we are led into

our first riddle of causation:

How do people EVER acquire knowledge of CAUSATION?

|

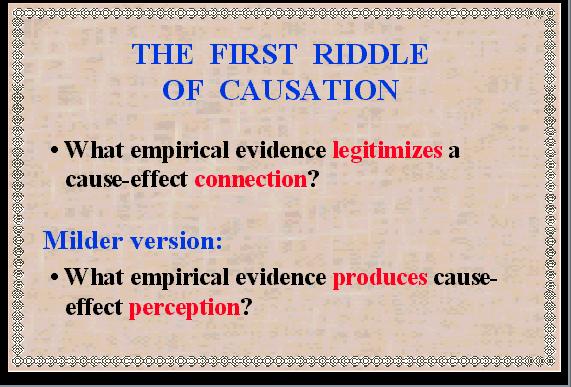

SLIDE 24: THE FIRST RIDDLE OF CAUSATION

We saw in the rooster example that regularity of succession

is not sufficient; what WOULD be sufficient?

What patterns of experience would justify calling

a connection "causal"?

Moreover, what patterns of experience

CONVINCE people that a connection is "causal"?

|

|

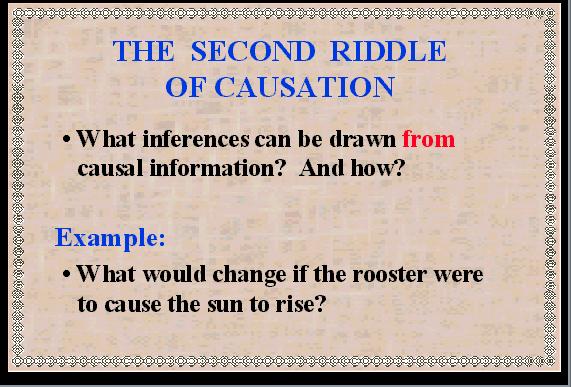

SLIDE 25: THE SECOND RIDDLE OF CAUSATION

If the first riddle concerns the LEARNING

of causal connections, the second

concerns its usage:

What DIFFERENCE does it make if I told you

that a certain connection is or is not causal?

Continuing our example, what difference does it make

if I told you that the rooster does cause the sun to rise?

This may sound trivial.

The obvious answer is that knowing what causes what

makes a big difference in how we act.

If the rooster's crow

causes the sun to rise, we could make the

night shorter by waking up our rooster earlier and making him

crow - say by telling him the latest rooster joke.

|

But this riddle is NOT as trivial as it seems.

If causal information has an empirical meaning

beyond regularity of succession, then that information

should show up in the laws of physics.

But it does not!

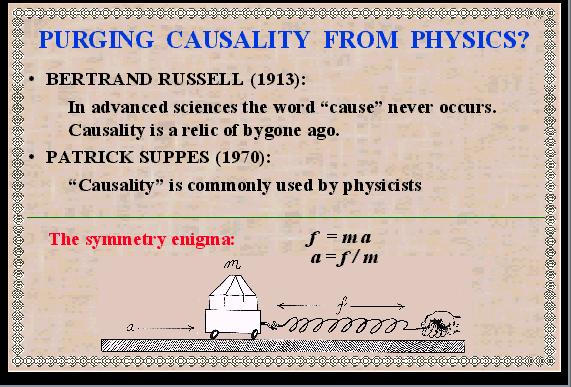

The philosopher Bertrand Russell made this argument in 1913:

|

SLIDE 26: PURGING CAUSALITY FROM PHYSICS?

"All philosophers," says Russell, "imagine that

causation is one of the fundamental axioms of

science, yet oddly enough, in

advanced sciences, the word 'cause' never occurs ... The law of

causality, I believe,

is a relic of a bygone age,

surviving, like the monarchy, only because it is

erroneously supposed to do no harm ..."

Another philosopher, Patrick Suppes, on the other hand,

arguing for the importance of causality, noted:

"There is scarcely an issue of *PHYSICAL REVIEW*

that does not contain at least one article

using either 'cause' or 'causality' in its title."

|

What we conclude from this exchange is that

physicists talk, write, and think one way and formulate

physics in another.

Such bilingual activity would be forgivable if causality

were used merely as a

convenient communication device - a shorthand for expressing

complex patterns of physical relationships

that would otherwise take many equations to write.

After all, science is full of abbreviations:

we say "multiply x by 5" instead of "add x

to itself 5 times"; we say "density" instead of

"the ratio of mass to volume".

Why pick on causality?

"Because causality is different," Lord Russell would argue,

"It could not possibly be an abbreviation,

because the laws of physics are all symmetrical, going both ways,

while causal relations are uni-directional, going from cause to effect."

Take for instance Newton's law

f = ma

The rules of algebra permit us to write this law

in a wild variety of syntactic forms, all meaning

the same thing - that if we know any two of the three quantities,

the third is determined.
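To make the symmetry concrete, here is a minimal Python sketch. The helper `solve_newton` is a hypothetical illustration of our own, not part of any library: one equation answers for whichever quantity is missing, and nothing in it marks force as the cause and acceleration as the effect.

```python
def solve_newton(f=None, m=None, a=None):
    """Return whichever of f, m, a is missing from f = m * a.

    One equation serves every direction; nothing in it says that
    force "causes" acceleration rather than the other way around.
    """
    missing = [name for name, value in (("f", f), ("m", m), ("a", a)) if value is None]
    if len(missing) != 1:
        raise ValueError("supply exactly two of f, m, a")
    if f is None:
        return m * a   # f = m a
    if m is None:
        return f / a   # m = f / a  (the ratio f/a "determines" the mass)
    return f / m       # a = f / m


print(solve_newton(m=2.0, a=3.0))  # 6.0 (force)
print(solve_newton(f=6.0, a=3.0))  # 2.0 (mass)
print(solve_newton(f=6.0, m=2.0))  # 3.0 (acceleration)
```

The algebra is indifferent to which variable we solve for; any causal reading has to come from outside the equation.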

Yet, in ordinary discourse

we say that force causes acceleration - not that

acceleration causes force, and we feel very strongly

about this distinction.

Likewise, we say that the ratio f/a helps us DETERMINE

the mass, not that it CAUSES the mass.

Such distinctions are not supported by the

equations of physics, and this leads us to ask whether

the whole causal vocabulary is purely

metaphysical.

"surviving, like the monarchy...etc."

Fortunately, very few physicists paid attention to Russell's enigma.

They continued to write equations in the office

and talk cause-effect in the CAFETERIA, and with

astonishing success they smashed the atom and invented the transistor

and the laser.

The same is true for engineering.

But in another arena the tension could not go

unnoticed, because in that arena the demand for

distinguishing causal from other relationships was very

explicit.

This arena is statistics.

The story begins with the discovery of correlation,

about one hundred years ago.

|

SLIDE 27: FRANCIS GALTON (PORTRAIT)

Francis Galton, inventor of fingerprinting and cousin

of Charles Darwin, quite understandably set out to

prove that talent and virtue run in families.

|

|

SLIDE 28: TITLE PAGE "NATURAL INHERITANCE"

These investigations drove Galton to consider various ways of

measuring how properties of one class of individuals or objects are

related to those of another class.

|

|

SLIDE 29: GALTON'S PLOT OF CORRELATED DATA (1888)

In 1888, he measured the length of a person's forearm and the

size of that person's head and

asked to what degree

one of these quantities can predict the other.

He stumbled upon the following discovery: If you

plot one quantity against the other

and scale the two axes properly,

then the slope of the best-fit line has some nice mathematical

properties:

The slope is 1 (or -1, for an inverse relation) only when one quantity can predict

the other precisely; it is zero whenever the prediction

is no better than a random guess and, most remarkably, the slope

is the same no matter if you plot X against Y or Y against X.
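Galton's observation can be checked numerically. The sketch below assumes that "scaling the axes properly" means converting each variable to standard units (subtract the mean, divide by the standard deviation), then fits a least-squares line both ways. The two slopes coincide, and their common value is what is now called Pearson's correlation coefficient; it comes out negative for an inverse relation. The function names and the five-point data sets are our own made-up illustration, not Galton's measurements.

```python
from statistics import fmean, pstdev


def standardize(values):
    """Convert to standard units: mean 0, (population) standard deviation 1."""
    mu, sigma = fmean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def ls_slope(xs, ys):
    """Least-squares slope of the best-fit line through the points (x, y)."""
    mx, my = fmean(xs), fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def galton_slopes(xs, ys):
    """Slopes of Y-on-X and X-on-Y once both axes are in standard units."""
    zx, zy = standardize(xs), standardize(ys)
    return ls_slope(zx, zy), ls_slope(zy, zx)


# Made-up illustrative data, not Galton's measurements.
print(galton_slopes([1, 2, 3, 4, 5], [2, 4, 6, 8, 10]))  # both approximately 1.0
print(galton_slopes([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]))   # both approximately 0.8
```

In the second data set the points only partly line up, and the standardized slope is 0.8 whichever variable goes on the horizontal axis.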

"It is easy to see," said Galton, "that co-

relation must be the consequence of the variations of

the two organs being partly due to common causes."

Here we have, for the first time, an objective measure

of how two variables are "related" to each other, based strictly

on the data, clear of human judgment or opinion.

|

|

SLIDE 30: KARL PEARSON (PORTRAIT, 1890)

Galton's discovery dazzled one of his students, Karl Pearson, now

considered the founder of modern statistics.

Pearson

was 30 years old at the time, an accomplished physicist

and philosopher about to turn lawyer, and this is how he describes,

45 years later, his initial reaction to Galton's discovery:

|

|

SLIDE 31: KARL PEARSON (1934)

"I felt like

a buccaneer of Drake's days -...

I interpreted that

sentence of Galton to mean that there was a category

broader than causation, namely correlation, of which

causation was only the limit, and that this new

conception of correlation brought psychology,

anthropology, medicine, and sociology in large parts

into the field of mathematical treatment."

Now, Pearson has been described as a person "with the kind of drive and

determination that took Hannibal over the Alps and

Marco Polo to China."

When Pearson felt like a buccaneer, you

could be sure he would get his bounty.

|

|

SLIDE 32: CONTINGENCY TABLE (1911)

1911 saw the publication of

the third edition of his book "The Grammar of Science".

It contained a new chapter titled

"Contingency and correlation - the insufficiency of

causation," and this is what Pearson says in that chapter:

"Beyond such discarded fundamentals as 'matter' and 'force' lies

still another fetish amidst the inscrutable arcana of modern science,

namely, the category of cause and effect."

|

|

SLIDE 33: KARL PEARSON (1934)

Thus, Pearson categorically denies the need for an

independent concept of causal relation beyond

correlation.

He held this view throughout his life and,

accordingly, did not mention causation in

ANY of his technical papers.

His crusade against animistic concepts such as "will" and "force"

was so fierce and his rejection of determinism so absolute that he

EXTERMINATED causation from statistics before it had

a chance to take root.

|

|

SLIDE 34: SIR RONALD FISHER

It took another 25 years and

another strong-willed person, Sir Ronald Fisher, for

statisticians to formulate the randomized experiment - the

only scientifically proven method of testing causal

relations from data, and, to this day, the one and only causal concept

permitted in mainstream statistics.
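The logic of randomization can be illustrated with a small simulation. Everything in it is an assumption invented for the example: a hidden factor Z that raises both the chance of receiving treatment X and the outcome Y, and a true effect of X on Y fixed at 2. When Z influences who gets treated, the naive comparison of treated and untreated groups mixes the effect of X with the influence of Z; assigning X by coin flip, as in Fisher's randomized experiment, breaks that link.

```python
import random

TRUE_EFFECT = 2.0  # assumed effect of X on Y in this toy model


def outcome(x, z, rng):
    """Hypothetical outcome: Y depends on treatment X and hidden factor Z."""
    return TRUE_EFFECT * x + 3.0 * z + rng.gauss(0.0, 1.0)


def difference_in_means(samples):
    """Estimate E[Y | X=1] - E[Y | X=0] from (x, y) pairs."""
    treated = [y for x, y in samples if x == 1]
    control = [y for x, y in samples if x == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


def simulate(n, randomized, seed=0):
    """Draw n units; X is either chosen by coin flip or driven by Z."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        z = 1 if rng.random() < 0.5 else 0
        if randomized:
            x = 1 if rng.random() < 0.5 else 0  # coin flip, ignores Z
        else:
            x = 1 if rng.random() < (0.8 if z else 0.2) else 0  # Z drives X
        samples.append((x, outcome(x, z, rng)))
    return difference_in_means(samples)


print(simulate(100_000, randomized=False))  # near 3.8: effect plus confounding
print(simulate(100_000, randomized=True))   # near 2.0: the assumed true effect
```

Under these assumed numbers the observational comparison settles near 2 + 3 x (0.8 - 0.2) = 3.8, because Z is present in 80% of the treated units but only 20% of the untreated ones; the randomized comparison settles near the true effect of 2.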

And that is roughly where things stand today...

If we count the number of doctoral theses, research

papers, or textbook pages

written on causation, we get the impression that Pearson still

rules statistics.

The "Encyclopedia of Statistical

Sciences" devotes 12 pages to correlation but only 2

pages to causation, and spends one of those pages

demonstrating that "correlation does not imply

causation."

Let us hear what modern statisticians say about causality.

|

|

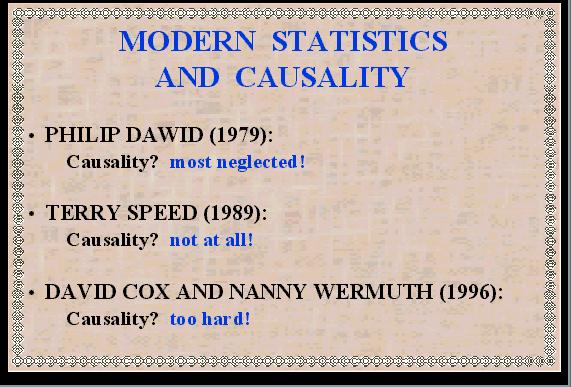

SLIDE 35: MODERN STATISTICS AND CAUSALITY

Philip Dawid, the current editor of Biometrika - the

journal founded by Pearson - admits:

"causal inference is one of the most important, most

subtle, and most neglected of all the problems of

statistics".

Terry Speed, former president of the Biometric Society

(whom you might remember as an expert witness at the

O.J. Simpson murder trial),

declares: "considerations of causality

should be treated as they have always been treated in

statistics: preferably not at all (but if necessary, then

with very great care)."

Sir David Cox and Nanny Wermuth,

in a book published just a few months ago, apologize as follows: "We did

not in this book use the words CAUSAL or CAUSALITY....

Our reason for caution is that it is rare that firm

conclusions about causality can be drawn from one

study."

|

This position of caution and avoidance has paralyzed many fields

that look to statistics for guidance, especially economics and social science.

A leading social scientist stated in 1987:

"It would be very healthy if more researchers

abandon thinking of and using terms such as

cause and effect."

Can this state of affairs be the work of just

one person, even a buccaneer like Pearson?

I doubt it.

But how else can we explain why statistics, the field that

has given the world such powerful concepts as

the testing of hypotheses and the design of experiments,

would give up on causation so early?

One obvious explanation is, of course,

that causation is much harder to measure than

correlation.

Correlations can be estimated directly

in a single uncontrolled study, while causal conclusions

require controlled experiments.

But this is too simplistic;

statisticians are not easily deterred by difficulties and

children manage to learn cause-effect relations

WITHOUT running controlled experiments.

The answer, I believe, lies deeper,

and it has to do with the official language

of statistics, namely the language of probability.

This may come as a surprise to some of you, but

the word "CAUSE" is not in the vocabulary of probability theory;

we cannot express in the language of probabilities the sentence,

"MUD DOES NOT CAUSE RAIN" - all we can say is that the two

are mutually correlated, or dependent - meaning if we find one,

we can expect the other.
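The point can be illustrated with a made-up joint distribution over two yes/no events, rain and mud. In the sketch below (a toy illustration with invented probabilities), the joint distribution factors equally well as "rain first, then mud" and as "mud first, then rain", and each event raises the probability of the other. Nothing in the probabilities records which way the causal arrow points.

```python
from itertools import product

# Hypothetical joint distribution P(rain, mud); the numbers are invented.
JOINT = {
    (True, True): 0.27,
    (True, False): 0.03,
    (False, True): 0.07,
    (False, False): 0.63,
}
RAIN, MUD = 0, 1  # positions of each variable in the keys above


def marginal(index, value):
    """P(variable at position `index` == value)."""
    return sum(p for key, p in JOINT.items() if key[index] == value)


def conditional(target, t_val, given, g_val):
    """P(target == t_val | given == g_val)."""
    both = sum(p for key, p in JOINT.items()
               if key[target] == t_val and key[given] == g_val)
    return both / marginal(given, g_val)


# Both factorizations reproduce the same joint distribution:
#   P(r) P(m | r)   ("rain, then mud")
#   P(m) P(r | m)   ("mud, then rain")
for r, m in product([True, False], repeat=2):
    f1 = marginal(RAIN, r) * conditional(MUD, m, RAIN, r)
    f2 = marginal(MUD, m) * conditional(RAIN, r, MUD, m)
    assert abs(f1 - JOINT[(r, m)]) < 1e-12 and abs(f2 - JOINT[(r, m)]) < 1e-12

# Dependence runs both ways: each event makes the other more likely.
print(conditional(MUD, True, RAIN, True), marginal(MUD, True))   # roughly 0.9 vs 0.34
print(conditional(RAIN, True, MUD, True), marginal(RAIN, True))  # roughly 0.79 vs 0.3
```

A statement such as "mud does not cause rain" would have to distinguish these two factorizations, and the joint distribution alone gives us no way to do so.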

Naturally, if we lack a language to express a certain concept

explicitly, we can't expect to develop scientific activity

around that concept.

Scientific development requires that knowledge be transferred

reliably from one study to another and, as Galileo showed

350 years ago, such transference requires the precision

and computational benefits of a formal language.

I will soon come back to discuss the importance of

language and notation, but first,

I wish to conclude this

historical survey with a tale from another field in

which causation has had its share of difficulty.

This time it is computer science - the science of

symbols - a field that is relatively new, yet it has

placed a tremendous emphasis on language and notation and,

therefore, may offer a useful

perspective on the problem.

When researchers began to encode causal

relationships using computers, the two riddles of

causation were awakened with renewed vigor.

|

SLIDE 36: ROBOT IN LAB

Put yourself in the shoes of this robot who is trying to

make sense of what is going on in a kitchen or a

laboratory.

Conceptually, the robot's problems are the

same as those faced by an economist seeking to model the national debt

or an epidemiologist attempting to

understand the spread of a disease.

Our robot,

economist, and epidemiologist all need to track down

cause-effect relations from the environment, using

limited actions and noisy

observations.

This puts them right at Hume's first

riddle of causation: HOW?

|

|

SLIDE 37: ROBOT WITH MENTOR

The second riddle of causation also plays a role in the

robot's world.

Assume we wish to take a shortcut and

teach our robot all we know about cause and

effect in this room.

How should the robot organize and

make use of this information?

Thus, the two philosophical riddles of causation are

now translated into concrete and practical questions:

|

|

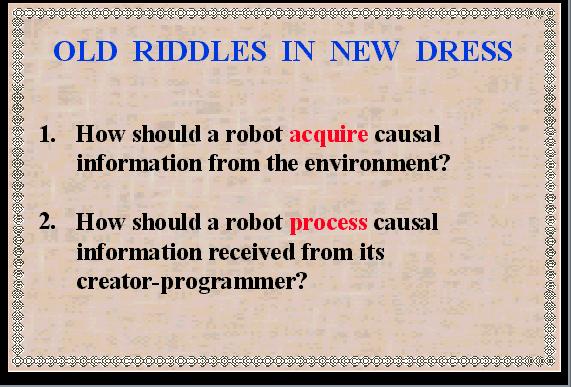

SLIDE 38: OLD RIDDLES IN NEW DRESS

How should a robot acquire causal information

through interaction with its environment?

How should a robot process causal information

received from its creator-programmer?

Again, the second riddle is not as trivial as it might

seem. Lord Russell's warning that causal relations and

physical equations are incompatible now surfaces as an

apparent flaw in logic.

|

|

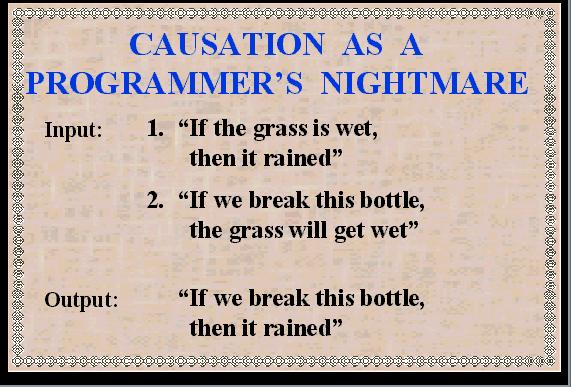

SLIDE 39: CAUSALITY: A PROGRAMMER'S NIGHTMARE

For example, when given the information, "If the grass

is wet, then it rained" and "If we

break this bottle, the grass will get wet," the

computer will conclude "If we break this bottle, then

it rained."

The swiftness and specificity with which such programming

bugs surface have made Artificial Intelligence programs an ideal laboratory

for studying the fine print of causation.
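A toy forward-chaining engine reproduces this bug. It is our own minimal illustration, not any particular AI system: each rule is stored as a plain logical implication, so the evidential rule (wet grass suggests rain) and the causal rule (breaking the bottle wets the grass) look exactly alike, and chaining them yields the absurd conclusion.

```python
# Hypothetical knowledge base: each rule is (premise, conclusion),
# read as a plain implication with no notion of causal direction.
RULES = [
    ("grass is wet", "it rained"),         # evidential: wet grass suggests rain
    ("bottle is broken", "grass is wet"),  # causal: breaking the bottle wets the grass
]


def forward_chain(facts, rules):
    """Apply modus ponens repeatedly until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"bottle is broken"}, RULES)))
# ['bottle is broken', 'grass is wet', 'it rained']  <- the bug
```

Nothing in the rule format lets the program tell "wet grass is evidence of rain" apart from "breaking the bottle makes the grass wet", which is exactly the distinction the inference needs.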

|
